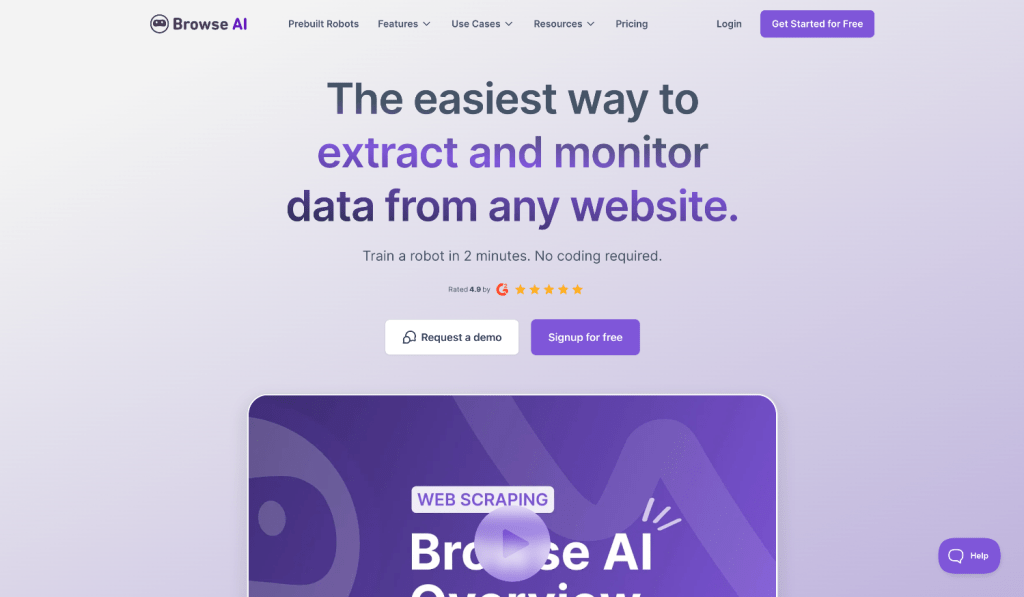

Browse.ai is a web scraping tool that lets users automate data extraction from websites without writing code. Through a point-and-click interface, users select elements on a webpage, and Browse.ai builds a “robot” that collects that data on demand or on a recurring schedule.
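For a sense of what a robot spares you from writing, here is a rough hand-coded equivalent: parse a page's HTML and pull out the text of the elements a user would otherwise click on. This is a minimal sketch using only Python's standard `html.parser`, not Browse.ai's implementation. The CSS classes `name` and `price`, the sample markup, and the helpers `extract` and `scrape_products` are all hypothetical. The sketch assumes the HTML has already been downloaded and is reasonably well-formed.

```python
from html.parser import HTMLParser

# Void elements never get an end tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class list contains target_class.

    A hypothetical stand-in for one element a user would click on in a
    no-code robot. Assumes every non-void tag is properly closed.
    """

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.results: list[str] = []
        self._depth = 0                  # > 0 while inside a matching element
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1             # a nested tag inside the matched element
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:             # closed the matched element itself
            self.results.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract(html: str, target_class: str) -> list[str]:
    """Return the stripped text of all elements carrying target_class."""
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.results


def scrape_products(html: str) -> list[dict[str, str]]:
    """Pair the hypothetical 'name' and 'price' fields into table rows."""
    names = extract(html, "name")
    prices = extract(html, "price")
    return [{"name": n, "price": p} for n, p in zip(names, prices)]


SAMPLE_HTML = """
<ul>
  <li><span class="name">Desk lamp</span> <span class="price">$24.99</span></li>
  <li><span class="name">Office chair</span> <span class="price">$149.00</span></li>
</ul>
"""

if __name__ == "__main__":
    for row in scrape_products(SAMPLE_HTML):
        print(row)
```

With a no-code tool, choosing `"price"` here corresponds to clicking a price on the page. Hand-written parsers like this one also break whenever the site's markup changes, which is part of what such tools aim to absorb.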

Key features of Browse.ai include:

- No-Code Web Scraping:
  - Users can set up web scraping tasks without writing code by using an intuitive point-and-click interface.

- Data Extraction Templates:
  - Pre-built templates for common data scraping tasks, such as extracting product prices, social media posts, or news articles from specific websites.

- Scheduled Automations:
  - Users can schedule automated scraping tasks at specific intervals (e.g., daily, weekly) to collect data continuously.

- Change Detection:
  - Browse.ai can monitor specific elements on a webpage and notify users when changes occur (e.g., price drops, content updates).

- Data Export Options:
  - Scraped data can be exported as CSV or Excel files, sent to Google Sheets, or delivered to other applications via APIs or webhooks.

- Visual Web Automation:
  - Browse.ai visually records user interactions with websites, such as clicking buttons or navigating through pages, to automate complex workflows.

- Cloud-Based Platform:
  - All scraping and data extraction tasks are performed on Browse.ai's cloud infrastructure, meaning users don’t need to run the processes locally on their machines.

- Multiple Data Integration Options:
  - Integrates with tools such as Zapier and Google Sheets, as well as other third-party services through their APIs, for easier data management and automation.

- Easy to Share Robots:
  - Users can share the scraping robots they've built with others, making it simple for teams to collaborate.

- Flexible Pricing:
  - Offers different pricing plans based on the frequency and amount of data scraping needed, including a free tier for smaller-scale tasks.
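The change detection and export features can be illustrated with a generic sketch. Browse.ai's own internals are not described here, so this code is an assumption rather than its implementation. If each robot run yields a mapping from a row key (here a product name) to a watched value (its price), then detecting changes reduces to a keyed diff between the previous run and the current one. A "price drop" alert is a filter over that diff. The names `Change`, `detect_changes`, `price_drops`, and `to_csv` are hypothetical, and the price format `$1,234.56` is assumed. The CSV step imitates the export option locally with Python's standard `csv` module and is not Browse.ai's export mechanism.

```python
import csv
import io
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Change:
    key: str
    kind: str              # "added", "removed", or "changed"
    old: Optional[str]
    new: Optional[str]


def detect_changes(previous: dict[str, str], current: dict[str, str]) -> list[Change]:
    """Diff two runs, each mapping a row key to the watched value."""
    changes = []
    for key in sorted(previous.keys() | current.keys()):
        old, new = previous.get(key), current.get(key)
        if old is None:
            changes.append(Change(key, "added", None, new))
        elif new is None:
            changes.append(Change(key, "removed", old, None))
        elif old != new:
            changes.append(Change(key, "changed", old, new))
    return changes


def parse_price(text: str) -> float:
    """Assumes prices look like '$1,234.56'."""
    return float(text.replace("$", "").replace(",", ""))


def price_drops(changes: list[Change]) -> list[Change]:
    """Keep only changed rows whose price went down (a typical alert trigger)."""
    return [c for c in changes
            if c.kind == "changed" and parse_price(c.new) < parse_price(c.old)]


def to_csv(changes: list[Change]) -> str:
    """Serialise the changes as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["key", "kind", "old", "new"])
    for c in changes:
        writer.writerow([c.key, c.kind, c.old or "", c.new or ""])
    return buffer.getvalue()


if __name__ == "__main__":
    yesterday = {"Desk lamp": "$24.99", "Office chair": "$149.00"}
    today = {"Desk lamp": "$19.99", "Office chair": "$149.00", "Monitor arm": "$39.50"}
    changes = detect_changes(yesterday, today)
    print([c.key for c in price_drops(changes)])  # ['Desk lamp']
    print(to_csv(changes))
```

Keying rows by a stable identifier rather than by position means a reordered page does not register as a change. The trade-off is that the sketch assumes such a key exists on every scraped row.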

These features make Browse.ai accessible to a broad range of users, from beginners collecting basic web data to professionals running complex, scheduled scraping tasks.